Information extraction in Scala with Odin (NLP/NLU)

1 Feb 2020 • 6 min read

There are a lot of tools out there that help extract information from text, and in this post I hope to cover one which I’ve found to be super useful. Let me introduce you to Odin.

Odin is a tool from the CLU lab, led by Mihai Surdeanu at the University of Arizona. It’s part of a bigger set of tools called “Processors” that deals with taking natural language and assigning meaning to it. I personally don’t have any stake in Odin; I just found the tool useful and wanted to share how to use it and what you can accomplish with it. You can read the Odin Manual here.

We at Goodcover use it to help identify intents when we are working with automated information gathering, aka Conversational UI. Goodcover is building an insurance carrier that is fairer to customers, and part of that mission involves building fair and automated systems.

You can follow along with the Jupyter notebook here

Abstractions

Processors is the framework upon which Odin is built. It abstracts over an underlying representation of common NLP-related concepts, such as part-of-speech tags, sentence dependencies, and words, to create tagged bits of information. Right now it supports CoreNLP from Stanford and a custom implementation. I’m going to use the CoreNLP version for now, but either will work with Odin.

Odin then uses the output of Processors along with a YAML rules file to extract different types of mentions (Odin parlance for the bits of information it pulls out). So you must first annotate a Document, then run an ExtractorEngine instance over the document to get the relevant mentions. It’s all mutable, but at least it’s contained.

For this blog post we are focusing on Odin; however, you can read more about how Processors works by visiting their GitHub page.

Prerequisites

If you want to follow along, I will have a repository up. You’ll need to install Jupyter Notebook with a Scala kernel, and then you’ll be ready to go! You could also just copy and paste the code and follow along with Ammonite, or use a normal Scala project.

Here we go.

Problem

We want to semantically understand a sentence, so let’s start with pulling out a direct object. Straight from Wikipedia:

Traditional grammar defines the object in a sentence as the entity that is acted upon by the subject.

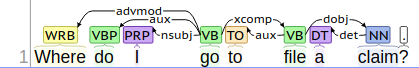

So how do we do that? Well, we need to look at syntactic dependencies (learn more about Stanford dependencies; note that we are not yet using Universal Dependencies, but the team has plans to upgrade). Let’s look at how we’d represent that in Odin. In the following sentence, we’d expect claim to be the direct object.

Where do I go to file a claim?

Let’s look at the syntax visualization (you can play with your own sentences here)

Great, as expected it tells us that claim is the direct object of the verb. Let’s figure out how we translate that into code.
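Before bringing in Odin, it may help to see what that check amounts to on a hand-written copy of the parse. The sketch below is plain Scala with no NLP library involved: the tokens and incoming edges are hard-coded from the parser output for this sentence (printed in full later in the post), and the `Token` case class and `incoming` map are my own illustrative names, not Processors types.

```scala
// Tokens as (index, word, part-of-speech tag), copied from the parser output.
final case class Token(index: Int, word: String, tag: String)

val tokens = Vector(
  Token(0, "where", "WRB"), Token(1, "do", "VBP"), Token(2, "I", "PRP"),
  Token(3, "go", "VB"), Token(4, "to", "TO"), Token(5, "file", "VB"),
  Token(6, "a", "DT"), Token(7, "claim", "NN"), Token(8, "?", ".")
)

// incoming(i) lists the (head index, relation) pairs that point at token i.
val incoming: Map[Int, Seq[(Int, String)]] = Map(
  0 -> Seq(3 -> "advmod"), 1 -> Seq(3 -> "aux"), 2 -> Seq(3 -> "nsubj"),
  4 -> Seq(5 -> "aux"), 5 -> Seq(3 -> "xcomp"),
  6 -> Seq(7 -> "det"), 7 -> Seq(5 -> "dobj")
)

// Keep tokens that are nouns (tag starts with N) and have an incoming dobj edge.
val objects: Vector[String] = tokens.filter { t =>
  t.tag.startsWith("N") &&
    incoming.getOrElse(t.index, Seq.empty).exists(_._2 == "dobj")
}.map(_.word)

println(objects) // Vector(claim)
```

Odin lets us state the same condition declaratively in a rule instead of writing the filter by hand.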

Getting Started

Let’s set everything up so we can test out Odin. First, set up the imports; since this pulls down models, it might take a bit. This set of declared dependencies is specific to Jupyter-Scala. Apache Zeppelin and Ammonite declare dependencies differently, so you’ll potentially need to replace this.

This gets all the dependencies we’ll need in place.

Next, we instantiate the individual components. They are lazy so we’ll warm them up. Now they’ll be ready to extract mentions from the annotated document.

This should succeed, even if you see standard error output from the Stanford CoreNLP toolkit. This is fixed in later versions of CoreNLP.
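For readers without the notebook open, the setup described in this section might look roughly like the following. It’s a minimal sketch, assuming the Processors and Odin artifacts (including the CoreNLP models) are already on the classpath; the `extract` helper is a hypothetical name of mine, not part of the library.

```scala
import org.clulab.odin.{ExtractorEngine, Mention}
import org.clulab.processors.Document
import org.clulab.processors.corenlp.CoreNLPProcessor

// CoreNLP-backed processor; its models are loaded lazily on first use.
val proc = new CoreNLPProcessor()

// Warm-up: annotating a throwaway sentence forces the models to load now.
proc.annotate("Warm up the models.")

// Hypothetical helper: annotate the text, then run the rules over the document.
def extract(rules: String, text: String): Seq[Mention] = {
  val doc: Document = proc.annotate(text)
  val engine = ExtractorEngine(rules)
  engine.extractFrom(doc)
}
```

Each returned mention exposes fields such as `text`, `label`, and `foundBy` (the name of the rule that produced it), which is the kind of information the printer in the next section displays.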

Helper Code

Here’s a snippet of helper code. It is too long to include inline, but it prints out the document dependencies and mentions. We will be using NLPPrinter for introspection of the results. It’s included in the notebook, so if you’re following that way it works out of the box.

Odin Finally

On to the exciting stuff. Let’s instantiate a single rule for Odin per our problem statement above: how do we find direct objects in a sentence?

Below I’ve created a rules string holding YAML that looks for a token whose incoming relationship is direct object (dobj).

What do some of these attributes mean?

- Priority — This matters when rules depend on one another: if a rule matches on a mention produced by another rule (rather than on a “tag”, as below), that other rule needs to run first. Lower numbers run first.

- Type — looking for a token

- Unit — the default thing to look over, so tag (part of speech) vs word

- Label — a list of labels or a taxonomy (read more about it in the Odin manual)

- Pattern — YAML is goofy, so the syntax is a bit wonky, but it’s looking for any part-of-speech tag that begins with N, in other words any form of noun. The slashes denote a regular expression, and the basic unit is the part-of-speech tag.
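Putting those attributes together, a rule of this shape could be written as follows. This is a reconstruction from the descriptions above and the rule name and label that show up in the printed results, so treat it as a sketch rather than the exact rule from the notebook. The explicit `[tag=... & incoming=...]` token constraint is my choice of spelling.

```scala
// A one-rule Odin grammar held in a Scala string.
// stripMargin removes the leading `|` margin so the YAML indentation survives.
val rules: String =
  """|- name: obj
     |  label: Object
     |  priority: 1
     |  type: token
     |  unit: "tag"
     |  pattern: |
     |    [tag=/^N/ & incoming=dobj]
     |""".stripMargin
```

The string can then be handed to `ExtractorEngine(rules)` and run over an annotated document.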

Sure enough, this gives us the desired result! Here’s our NLPPrinter in action, printing some of the information Odin and Processors return.

sentence #0

where do I go to file a claim ?

Tokens: (0,where,WRB), (1,do,VBP), (2,I,PRP), (3,go,VB), (4,to,TO), (5,file,VB), (6,a,DT), (7,claim,NN), (8,?,.)

roots: 3

outgoing:

0:

1:

2:

3: (0,advmod) (1,aux) (2,nsubj) (5,xcomp)

4:

5: (4,aux) (7,dobj)

6:

7: (6,det)

incoming:

0: (3,advmod)

1: (3,aux)

2: (3,nsubj)

3:

4: (5,aux)

5: (3,xcomp)

6: (7,det)

7: (5,dobj)

entities:

List(Object) => claim

------------------------------

Rule => obj

Type => TextBoundMention

------------------------------

Object => claim

------------------------------

events:

==================================================

As you can see, word 7 (claim) has an incoming direct object relationship (dobj), and our sentence is correctly parsed. Let’s try something more complex.

Complex Example

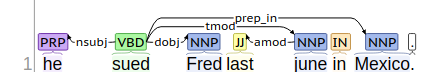

To take advantage of this system, we need to look at something more complex. We are going from beginner to advanced, so hang on.

he sued Fred last june in Mexico.

So we’d like to know if someone gets sued, where it happened, when it happened, and who was involved.

We’ve introduced some new attributes below which represent some new Odin concepts.

- Type — We’ve added a dependency type which looks at a trigger and assembles multiple text mentions.

- Priority — It’s higher, since we want this rule to run after the entities have been identified.

- Additional attributes — claimant and defendant. Both must match mentions already tagged as entities, one or more times (+); the quantifier syntax is borrowed from regex.

- [tag=/^V/ & lemma="sue"] — The pattern kicks off with a verb that has the lemma "sue". A lemma is just the dictionary form of a word, so you don’t have to worry about different word forms: the lemma of “sued” is “sue”. Lemmatization is a more advanced and “correct” version of stemming, which you can read about here.

- <xcomp? — The left angle bracket means incoming, and the ? means it’s optional. xcomp lets us look past a governing verb, such as "seems" in "seems to believe" or "go" in "go to file a claim".
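An event rule using these pieces might be sketched as below. It is modelled on the attributes just described and on the rule name, label, and arguments that appear in the printed results, so the exact argument patterns (`nsubj`, `dobj`, `prep_in`) are my assumptions rather than the notebook’s text. It also assumes that separate priority-1 rules have already produced mentions labelled Entity and Location.

```scala
// Hypothetical reconstruction of the event rule as a YAML string.
// Entity and Location mentions are assumed to come from earlier rules.
val liabilityRule: String =
  """|- name: liability-syntax-1
     |  label: liability
     |  priority: 2
     |  type: dependency
     |  pattern: |
     |    trigger = [tag=/^V/ & lemma="sue"]
     |    claimant: Entity+ = <xcomp? nsubj
     |    defendant: Entity+ = dobj
     |    location: Location? = prep_in
     |""".stripMargin
```

Each argument line reads as "name: required label and quantifier = dependency path from the trigger", so the claimant is found by optionally stepping up an xcomp edge and then down an nsubj edge.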

Success!

Let’s look at the results.

- last june — Flagged as an entity by the CoreNLP named entity recognizer. In the code you can see a normalized representation, so it’s machine-parseable.

- Mexico — flagged again as a location by NER (named entity recognizer).

- Claimant and Defendant — Both filled in, yay! It recognized the root word of sued is sue, and was able to use sentence dependencies to fill out the supporting text mentions.
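To make that last point concrete, here is a plain-Scala sketch of how the arguments fall out of the dependency edges. The edges are hard-coded from the parse printed for this sentence, and the `roleFor` mapping is my own illustration of what the rule’s argument patterns amount to, not Odin’s internals.

```scala
// Words of the sentence, indexed as in the parser output.
val words = Vector("he", "sued", "Fred", "last", "june", "in", "Mexico", ".")

// outgoing(i) lists the (dependent index, relation) pairs leaving token i.
val outgoing: Map[Int, Seq[(Int, String)]] = Map(
  1 -> Seq(0 -> "nsubj", 2 -> "dobj", 4 -> "tmod", 6 -> "prep_in"),
  4 -> Seq(3 -> "amod")
)

// The trigger is the verb "sued" at index 1.
val trigger = 1

// Illustrative mapping from dependency relation to event role.
val roleFor = Map("nsubj" -> "claimant", "dobj" -> "defendant", "prep_in" -> "location")

// Follow each outgoing edge from the trigger and keep the ones that name a role.
val arguments: Map[String, String] =
  outgoing.getOrElse(trigger, Seq.empty).collect {
    case (dependent, relation) if roleFor.contains(relation) =>
      roleFor(relation) -> words(dependent)
  }.toMap

println(arguments)
```

The tmod edge to "june" is skipped here because no role is mapped to it, which mirrors the event printed below having no date argument.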

sentence #0

he sued Fred last june in Mexico .

Tokens: (0,he,PRP), (1,sued,VBD), (2,Fred,NNP), (3,last,JJ), (4,june,NNP), (5,in,IN), (6,Mexico,NNP), (7,.,.)

roots: 1

outgoing:

0:

1: (0,nsubj) (2,dobj) (4,tmod) (6,prep_in)

2:

3:

4: (3,amod)

5:

6:

incoming:

0: (1,nsubj)

1:

2: (1,dobj)

3: (4,amod)

4: (1,tmod)

5:

6: (1,prep_in)

entities:

List(Date) => last june

------------------------------

Rule => ner-date

Type => TextBoundMention

------------------------------

Date => last june

------------------------------

List(Entity, Subject) => he

------------------------------

Rule => subj

Type => TextBoundMention

------------------------------

Entity, Subject => he

------------------------------

List(Entity, Object) => Fred

------------------------------

Rule => obj

Type => TextBoundMention

------------------------------

Entity, Object => Fred

------------------------------

List(Location) => Mexico

------------------------------

Rule => ner-location

Type => TextBoundMention

------------------------------

Location => Mexico

------------------------------

List(liability) => he sued Fred last june in Mexico

------------------------------

Rule => liability-syntax-1

Type => EventMention

------------------------------

trigger => sued

claimaint (Entity, Subject) => he

defendant (Entity, Object) => Fred

location (Location) => Mexico

------------------------------

Conclusion

Odin allows you to leverage all the best parts of natural language processing by putting together concepts (triggers, named entity recognition, parts-of-speech tagging, syntactic dependencies, etc) in a concise rule framework to declare what kind of data or relationships you want to pull out.

It’s really as complex or as simple as you want, since you define the rules and what’s important. As mentioned earlier, the Odin web frontend can help you explore this more interactively.

Natural Language Processing is fun.

Check out the Jupyter notebook here.

Also Goodcover is hiring! Come join us and work on making insurance fair and fun.
